Submissions

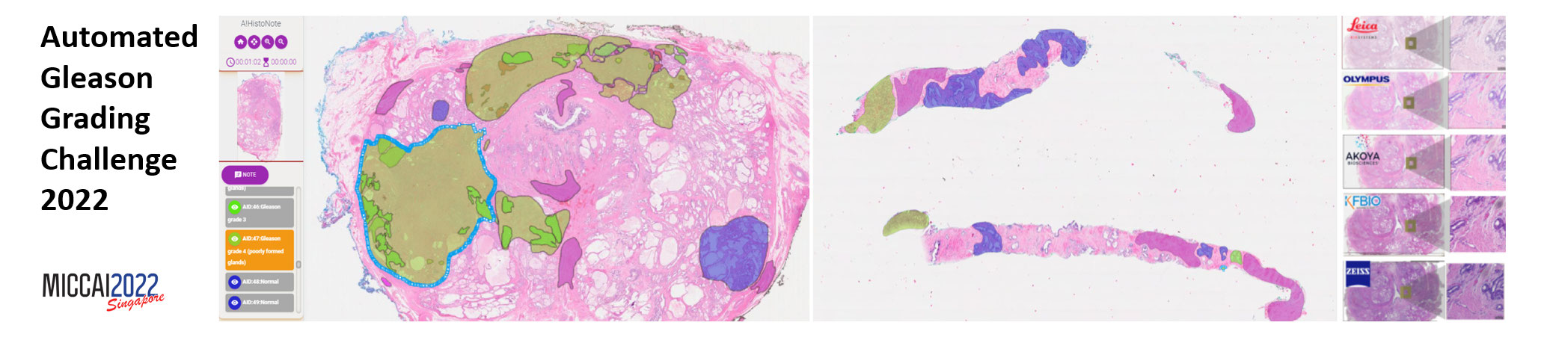

1. Participants should generate an index image for each test image (index 0 = background (empty), index 1 = Stroma, index 2 = Normal, index 3 = G3, index 4 = G4, index 5 = G5).

2. The index image should be resized to 2x for submission, i.e. downsized by a factor of 10. If the width or height of the image is not an integer after division by 10, please use the ceil function when calculating the output image size. Results will be considered invalid if a submitted image is not exactly the same size as the corresponding H&E image at 2x.

3. The *weighted-average F1-score* will be calculated for every image in the test set (only pixels within the ground-truth annotations are considered). The leaderboard is sorted by weighted-average F1-score, highest first.

4. Besides submitting the test results, participants are required to submit a short manuscript describing their methods. All team members should be listed as authors. We will try to list the names of all authors when we announce the final rankings on the challenge webpage.
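The size rule in step 2 can be sketched as follows. This is a minimal illustration, not the organisers' code: the function name `submission_size` and its parameters are hypothetical, and it only computes the expected output dimensions, leaving the actual resampling of the index image to the participant.

```python
import math

# Downsizing factor stated in the rules (full resolution to 2x).
DOWNSIZE_FACTOR = 10


def submission_size(width, height, factor=DOWNSIZE_FACTOR):
    """Return the (width, height) expected for the submitted index image.

    Hypothetical helper: each dimension is divided by `factor`, and
    non-integer results are rounded up with ceil, as the rules require.
    """
    if width <= 0 or height <= 0:
        raise ValueError("image dimensions must be positive")
    return math.ceil(width / factor), math.ceil(height / factor)


# A 12345 x 6780 pixel image: 1234.5 rounds up to 1235, 678.0 stays 678.
print(submission_size(12345, 6780))
```

When resampling an index image, an interpolation that does not blend neighbouring values (such as nearest-neighbour) keeps every pixel a valid class index; this is a general property of label maps rather than a requirement stated by the organisers.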

Details of submission will be announced soon.

Metric

The metric is the weighted-average F1-score of the detection results with respect to the ground-truth pixel-level annotations, with precision and recall calculated for each class. The ground-truth annotations are not exhaustive, and pathologists might not have annotated all the relevant regions. Therefore, in the generated index image, only the regions that lie within the ground-truth annotated regions are valid for assessment.

Weighted-average F1-score = 0.25 * F1-score_G3 + 0.25 * F1-score_G4 + 0.25 * F1-score_G5 + 0.125 * F1-score_Normal + 0.125 * F1-score_Stroma, where:

F1-score = 2 × Precision × Recall / (Precision + Recall); Precision = TP / (TP + FP); Recall = TP / (TP + FN)
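As an illustration of the metric, the sketch below computes the weighted-average F1-score for one image from two flattened label sequences. It is not the official evaluation code, and it rests on assumptions that are ours: index 0 in the ground truth is taken to mark pixels outside the annotated regions, a class with no true positives is given an F1-score of 0, and the function names are hypothetical.

```python
# Class indices and weights as given in the text:
# 1 = Stroma, 2 = Normal, 3 = G3, 4 = G4, 5 = G5.
CLASS_WEIGHTS = {1: 0.125, 2: 0.125, 3: 0.25, 4: 0.25, 5: 0.25}


def f1_score(tp, fp, fn):
    """F1 = 2 * P * R / (P + R), with P = TP/(TP+FP) and R = TP/(TP+FN)."""
    if tp == 0:
        # Assumption: no true positives gives F1 = 0 (also avoids 0/0).
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


def weighted_f1(ground_truth, prediction):
    """Weighted-average F1 over flattened ground-truth and predicted labels."""
    if len(ground_truth) != len(prediction):
        raise ValueError("ground truth and prediction must have the same size")
    # Per-class counters: [TP, FP, FN].
    counts = {c: [0, 0, 0] for c in CLASS_WEIGHTS}
    for gt, pred in zip(ground_truth, prediction):
        if gt not in counts:
            # Assumption: index 0 (or any unknown index) in the ground
            # truth means "not annotated", so the pixel is ignored.
            continue
        if gt == pred:
            counts[gt][0] += 1          # true positive for the class
        else:
            counts[gt][2] += 1          # false negative for the true class
            if pred in counts:
                counts[pred][1] += 1    # false positive for the predicted class
    return sum(w * f1_score(*counts[c]) for c, w in CLASS_WEIGHTS.items())


gt = [0, 1, 1, 2, 3, 4, 5]
pred = [3, 1, 2, 2, 3, 4, 5]
print(weighted_f1(gt, pred))
```

In this toy example the first pixel is ignored because it is unannotated, one Stroma pixel is misclassified as Normal, and the three grade classes are predicted perfectly, so the result is 0.125 × 2/3 + 0.125 × 2/3 + 0.75.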

Ranking method

Teams are ranked by final score:

Final_score = 0.6 * weighted F1-score_subset_1 + 0.2 * weighted F1-score_subset_2 + 0.2 * weighted F1-score_subset_3

Since subsets 2 and 3 are much smaller than subset 1, performance on subsets 2 and 3 is less indicative. Therefore, we give these two subsets lower weights.
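A small worked example of the final score, assuming the three per-subset weighted F1-scores have already been computed. The function name `final_score` and the example values are hypothetical.

```python
# Subset weights from the ranking formula: subset 1, subset 2, subset 3.
SUBSET_WEIGHTS = (0.6, 0.2, 0.2)


def final_score(f1_subset_1, f1_subset_2, f1_subset_3):
    """Combine the per-subset weighted F1-scores into the ranking score."""
    scores = (f1_subset_1, f1_subset_2, f1_subset_3)
    return sum(w * s for w, s in zip(SUBSET_WEIGHTS, scores))


# Illustrative values only: 0.6 * 0.9 + 0.2 * 0.8 + 0.2 * 0.7
print(round(final_score(0.9, 0.8, 0.7), 4))
```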

To access the evaluation code that we will use in the challenge, see our GitHub page (jydada/AGGC2022: Evaluation Code, github.com). Note: the code was updated on 2022/6/2.